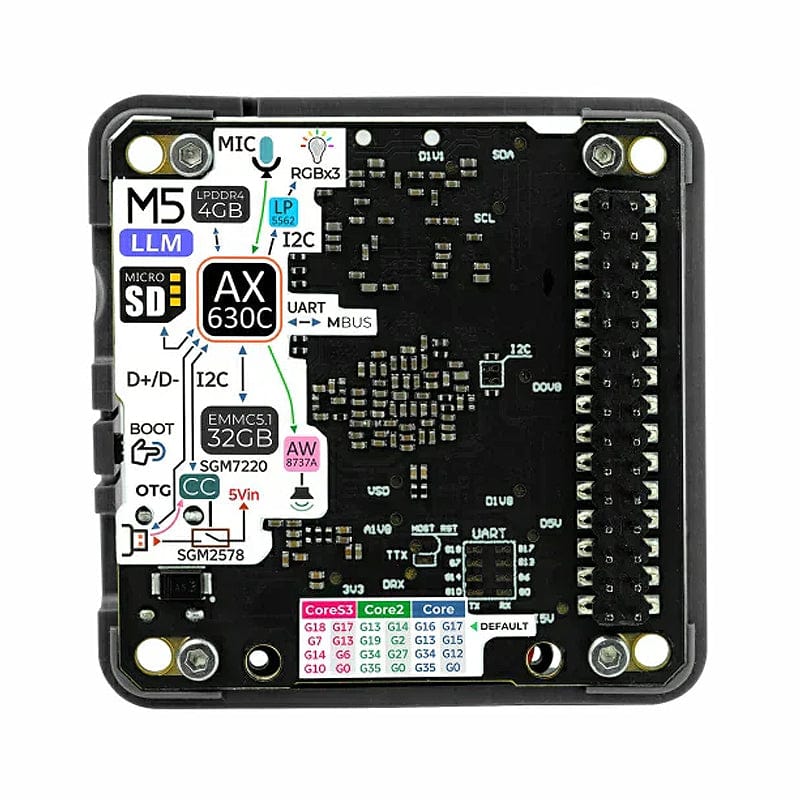

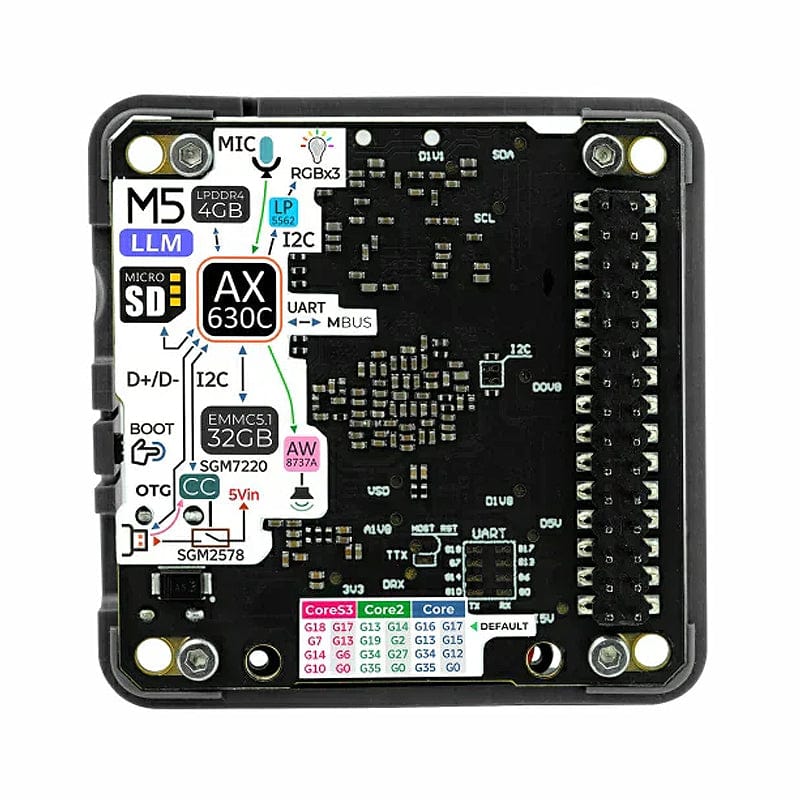

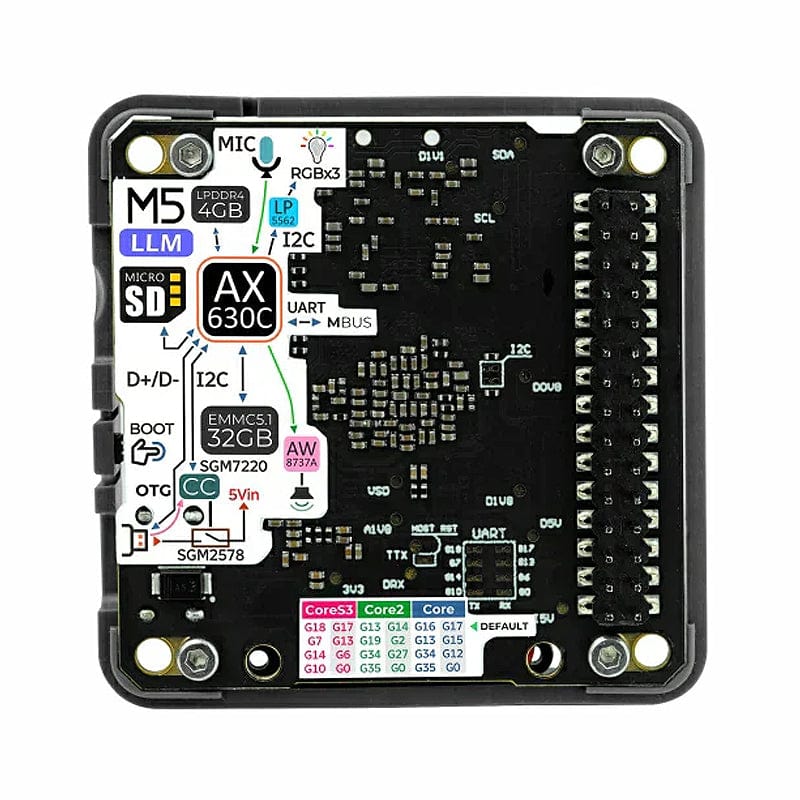

The M5Stack LLM (Large Language Model) Kit is an offline AI inference and data communication interface add-on, delivering a smooth and natural AI experience without relying on the cloud, ensuring privacy, security, and stability.

The LLM module offers a range of interfaces for system integration and expansion. It is powered from a stacked Core host through the M5BUS interface. The built-in CH340N USB converter provides USB-to-serial debugging, and the USB-C interface provides USB log output.

The RJ45 interface, together with the onboard network transformer, breaks out a 100 Mbps Ethernet port and the core's serial port (for SBC-style applications). The FPC-8P interface connects directly to the LLM module for stable serial communication, and an HT3.96x9P solder pad is reserved for DIY expansion.

The module integrates the StackFlow framework along with the Arduino/UiFlow libraries, so edge intelligence can be implemented with just a few lines of code. It is powered by the AXERA (AiXin) AX630C SoC, whose NPU delivers 3.2 TOPS at INT8 with native support for Transformer models, allowing demanding AI workloads to run on-device. It has 4 GB of LPDDR4 memory (1 GB for user applications and 3 GB dedicated to hardware acceleration) and 32 GB of eMMC storage, which lets it load multiple models in parallel and chain inference across them. Operating power consumption is about 1.5 W, making it more energy-efficient than many similar products.
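For power budgeting (for example, when the kit draws from a stacked Core host), the quoted power figures can be turned into supply current. This worked example assumes the 5 V supply and the 0.5 W no-load / 1.5 W full-load figures from the specification table and uses $I = P/V$; real draw will vary with workload and peripherals.

$$
I_{\text{full}} = \frac{P_{\text{full}}}{V} = \frac{1.5\ \text{W}}{5\ \text{V}} = 0.3\ \text{A},
\qquad
I_{\text{idle}} = \frac{P_{\text{idle}}}{V} = \frac{0.5\ \text{W}}{5\ \text{V}} = 0.1\ \text{A}
$$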

It supports multiple models and comes with the Qwen2.5-0.5B large language model pre-installed, along with built-in KWS (wake word), ASR (speech recognition), LLM (large language model), and TTS (text-to-speech) functions. Software and model packages can be updated quickly through apt. Installing the openai-api plugin makes the module compatible with the OpenAI standard API, enabling application modes such as chat, completions, speech-to-text, and text-to-speech.
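Because the openai-api plugin exposes the OpenAI standard API, a client should be able to reach it with an ordinary HTTP POST to a chat completions endpoint. The sketch below uses only Python's standard library and assumes the plugin follows the standard `/v1/chat/completions` path and response shape. The module's address, port, and model name (`BASE_URL`, `MODEL`) are hypothetical placeholders, not documented values, so substitute whatever your installation actually reports.

```python
import json
import urllib.request

# Hypothetical address and port of the module on the local network (assumption).
BASE_URL = "http://192.168.1.100:8000/v1"
# Hypothetical model identifier (assumption); use the name the plugin exposes.
MODEL = "qwen2.5-0.5B"


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(prompt: str) -> str:
    """Send the request and return the reply text (requires a reachable module)."""
    req = build_chat_request(prompt)
    with urllib.request.urlopen(req, timeout=60) as resp:
        reply = json.load(resp)
    # Standard OpenAI response shape: choices[0].message.content
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Only builds the request; call send_chat("Hello!") once the module is online.
    print(build_chat_request("Hello!").full_url)
```

Building the request separately from sending it keeps the payload easy to inspect offline; the official `openai` client library could be pointed at the same base URL instead.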

The official apt repository offers a wide range of model packages, including the language models deepseek-r1-distill-qwen-1.5b, InternVL2_5-1B-MPO, Llama-3.2-1B, Qwen2.5-0.5B, and Qwen2.5-1.5B; speech models (whisper-tiny and whisper-base for speech recognition, melotts for text-to-speech); and vision models such as yolo11. The repository is continuously updated to add support for newer models.

The kit is plug-and-play, and when paired with an M5 host, it provides an instant AI interactive experience.

Note: Models for the Module LLM use a proprietary AXERA format and must be converted before they can run. Models from other sources therefore cannot be used directly.

| Specification | Parameter |
| --- | --- |
| Processor SoC | AX630C, dual-core Cortex-A53 @ 1.2 GHz |
| NPU | 12.8 TOPS @ INT4 (max), 3.2 TOPS @ INT8 |
| Memory | 4 GB LPDDR4 (1 GB system memory + 3 GB dedicated to hardware acceleration) |
| Storage | 32 GB eMMC 5.1 |
| Communication | Serial, default 115200 baud, 8N1 (adjustable) |
| Microphone | MSM421A |
| Audio Driver | AW8737 |
| Speaker | 8 Ω @ 1 W, 2014 cavity speaker |
| Built-in Functions | KWS (wake word), ASR (speech recognition), LLM (large language model), TTS (text-to-speech) |
| RGB LED | 3 × RGB LED (2020 package), driven by LP5562 (status indicator) |
| Power Consumption | No load: 0.5 W @ 5 V; full load: 1.5 W @ 5 V |
| Button | Enters firmware download mode |
| Upgrade Interface | SD card / USB Type-C |
| Conversion Chip | CH340N |
| Ethernet Interface | RJ45 with onboard network transformer (11FB-05NL, SOP-16) |
| Serial Interfaces | FPC-8P, USB Type-C, RJ45 |
| DIY Expansion | HT3.96x9P solder pad |
| Operating Temperature | 0 to 40 °C |
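The default serial setting of 115200 baud, 8N1 means each byte travels as 1 start bit, 8 data bits, no parity, and 1 stop bit, or 10 bits on the wire. The sketch below turns that into an upper bound on raw payload throughput. It is generic UART arithmetic, not taken from M5Stack documentation, and real throughput of the module's protocol will be lower once message framing and gaps are included.

```python
def frame_bits(data_bits: int = 8, parity: bool = False, stop_bits: int = 1) -> int:
    """Bits on the wire per character: 1 start bit + data + optional parity + stop bits."""
    return 1 + data_bits + (1 if parity else 0) + stop_bits


def max_bytes_per_second(baud: int, data_bits: int = 8,
                         parity: bool = False, stop_bits: int = 1) -> float:
    """Upper bound on payload bytes per second, ignoring idle gaps and protocol overhead."""
    return baud / frame_bits(data_bits, parity, stop_bits)


def transfer_time_s(n_bytes: int, baud: int = 115200) -> float:
    """Minimum time in seconds to send n_bytes with 8N1 framing."""
    return n_bytes / max_bytes_per_second(baud)


if __name__ == "__main__":
    print(max_bytes_per_second(115200))      # 11520.0
    print(round(transfer_time_s(1024), 4))   # 0.0889
```

At the default rate, a 1 KiB message therefore needs at least about 89 ms on the serial link, which is worth keeping in mind when streaming long LLM outputs.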