
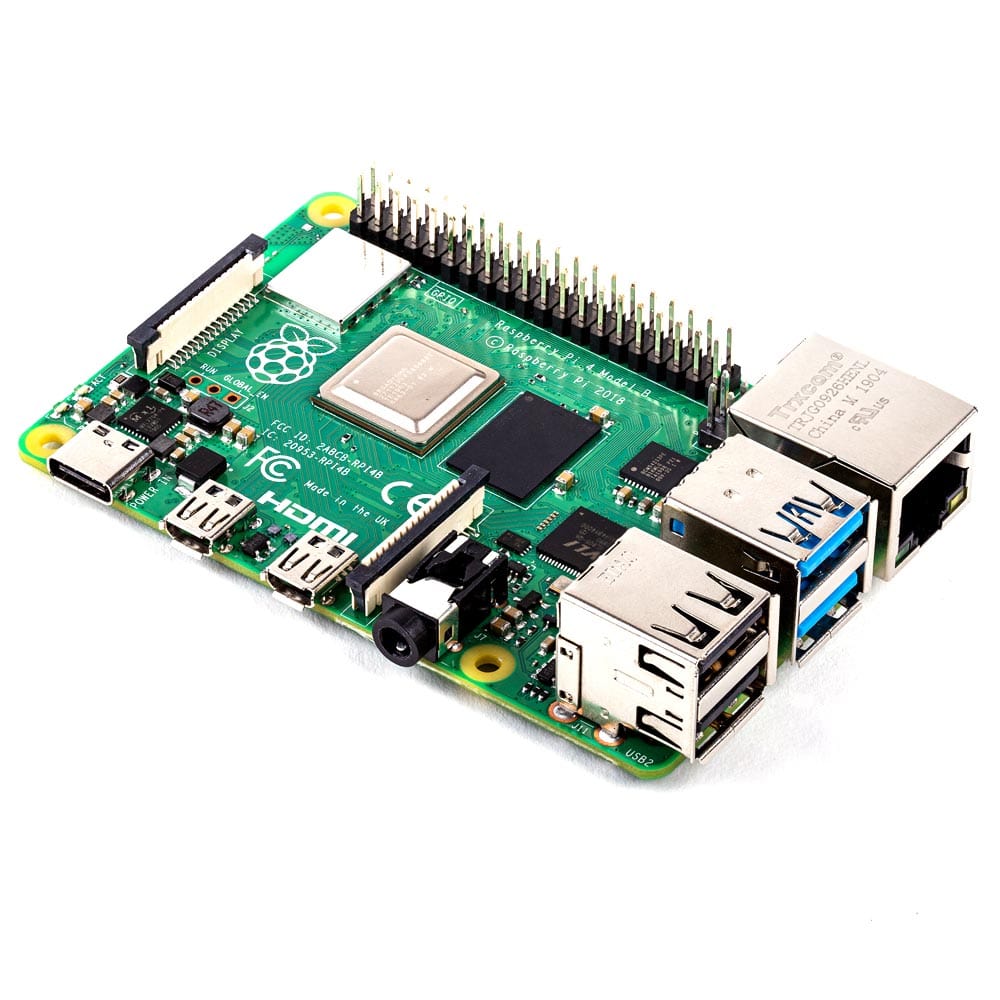

The Coral USB Accelerator is a USB accessory that brings machine learning inferencing to existing systems. It works with the Raspberry Pi and Linux, Mac, and Windows systems.

The Accelerator adds an Edge TPU coprocessor to your system, enabling high-speed machine learning inferencing simply by plugging it into a USB port!

Performs High-speed Machine Learning Inferencing

The on-board Edge TPU coprocessor can perform 4 trillion operations (tera-operations) per second (TOPS), using 0.5 watts for each TOPS (2 TOPS per watt). For example, it can execute state-of-the-art mobile vision models such as MobileNet v2 at almost 400 FPS while remaining power-efficient. See the Performance Benchmarks section below for details.
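The throughput and efficiency figures above are related by simple arithmetic. The sketch below assumes the Edge TPU runs at its rated peak (real workloads rarely do), so the power value it derives is implied by the rated figures, not a measured draw. It also computes the per-inference time budget behind the "almost 400 FPS" claim.

```python
# Rated figures from the specification above.
PEAK_TOPS = 4.0       # tera-operations per second (int8)
WATTS_PER_TOPS = 0.5  # power cost of each TOPS


def peak_power_watts(tops: float = PEAK_TOPS, watts_per_tops: float = WATTS_PER_TOPS) -> float:
    """Power implied by running at `tops` with the given cost per TOPS (not a measurement)."""
    return tops * watts_per_tops


def tops_per_watt(watts_per_tops: float = WATTS_PER_TOPS) -> float:
    """Efficiency is the reciprocal of the power cost per TOPS."""
    return 1.0 / watts_per_tops


def frame_budget_ms(fps: float) -> float:
    """Maximum time per inference, in ms, needed to sustain `fps` frames per second."""
    return 1000.0 / fps


if __name__ == "__main__":
    print(f"Implied peak power: {peak_power_watts():.1f} W")   # 2.0 W
    print(f"Efficiency: {tops_per_watt():.1f} TOPS per watt")  # 2.0
    print(f"400 FPS budget: {frame_budget_ms(400):.2f} ms")    # 2.50 ms
```

In other words, reaching 400 FPS means finishing each inference in about 2.5 ms, excluding any input preprocessing.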

Connects via USB to any system running Debian Linux (including Raspberry Pi), macOS, or Windows 10.
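On each host OS, the Edge TPU runtime is loaded as a shared library that TensorFlow Lite uses as a delegate. The mapping below follows the library names used, to our knowledge, by Coral's Python tooling (`libedgetpu.so.1`, `libedgetpu.1.dylib`, `edgetpu.dll`); treat them as assumptions and confirm them against the runtime version you install. The helper name `edgetpu_lib_name` is hypothetical.

```python
import platform
from typing import Optional

# Edge TPU runtime library names keyed by platform.system().
# Assumed names; verify against your installed Edge TPU runtime.
EDGETPU_SHARED_LIB = {
    "Linux": "libedgetpu.so.1",      # Debian Linux, including Raspberry Pi
    "Darwin": "libedgetpu.1.dylib",  # macOS
    "Windows": "edgetpu.dll",        # Windows 10
}


def edgetpu_lib_name(system: Optional[str] = None) -> str:
    """Return the Edge TPU runtime library name for `system` (defaults to this host)."""
    system = system or platform.system()
    try:
        return EDGETPU_SHARED_LIB[system]
    except KeyError:
        raise RuntimeError(f"Unsupported platform for the USB Accelerator: {system}") from None


if __name__ == "__main__":
    print(edgetpu_lib_name())
```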

No need to build models from the ground up. TensorFlow Lite models can be compiled to run on the Edge TPU.
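A minimal sketch of running a compiled model from Python, assuming the `tflite_runtime` package and the Edge TPU runtime are installed on a Linux host. The Edge TPU Compiler writes its output with an `_edgetpu.tflite` suffix; `model.tflite` is a hypothetical file name, and both helper functions are illustrative, not part of any Coral API. The `tflite_runtime` import is deferred so the path helper works on machines without it.

```python
from pathlib import Path


def compiled_model_path(model_path: str) -> str:
    """Name the Edge TPU Compiler gives its output: model.tflite -> model_edgetpu.tflite."""
    p = Path(model_path)
    return str(p.with_name(f"{p.stem}_edgetpu{p.suffix}"))


def make_edgetpu_interpreter(model_path: str, lib: str = "libedgetpu.so.1"):
    """Create a TFLite interpreter that offloads supported ops to the Edge TPU.

    Assumes tflite_runtime is installed; `lib` defaults to the Linux runtime name.
    """
    # Deferred import: only needed when actually running on the accelerator.
    import tflite_runtime.interpreter as tflite

    interpreter = tflite.Interpreter(
        model_path=model_path,
        experimental_delegates=[tflite.load_delegate(lib)],
    )
    interpreter.allocate_tensors()
    return interpreter


if __name__ == "__main__":
    path = compiled_model_path("model.tflite")  # hypothetical model file
    print(path)  # model_edgetpu.tflite
    # interpreter = make_edgetpu_interpreter(path)  # requires the USB Accelerator
```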

Easily build and deploy fast, high-accuracy custom image classification models to your device with AutoML Vision Edge.

| Feature | Specification |
|---|---|
| ML accelerator | Google Edge TPU coprocessor: 4 TOPS (int8); 2 TOPS per watt |
| Connector | USB 3.0 Type-C\* (data/power) |
| Dimensions | 65 mm x 30 mm |

\* Compatible with USB 2.0, but inferencing speed is much slower.


Performance Benchmarks

An individual Edge TPU is capable of performing 4 trillion operations (tera-operations) per second (TOPS), using 0.5 watts for each TOPS (2 TOPS per watt). How that translates to performance for your application depends on a variety of factors. Every neural network model has different demands, and if you're using the USB Accelerator device, total performance also varies based on the host CPU, USB speed, and other system resources.

With that said, the table below compares the time required to perform a single inference with several popular models, both on a CPU alone and with the Edge TPU. For a fair comparison, all models are the TensorFlow Lite versions, whether they run on the CPU or on the Edge TPU.

This represents a small selection of model architectures that are compatible with the Edge TPU:

Note: These figures measure only the time required to execute the model. They do not include the time to process input data (such as down-scaling images to fit the input tensor), which varies between systems and applications. The tests were also run as C++ benchmarks, so our public Python benchmark scripts may be slower due to Python overhead.
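The note's distinction between model execution and input preprocessing matters when you try to reproduce these numbers. The sketch below times only a caller-supplied `run_once` callable (for example, a wrapper around a TFLite interpreter's `invoke()`), assuming preprocessing has already been done, and discards warm-up runs because the first Edge TPU inference also loads the model into the accelerator's memory. The function name and the warm-up and iteration counts are illustrative choices; being Python, it also includes the interpreter overhead the note mentions.

```python
import statistics
import time
from typing import Callable


def median_latency_ms(run_once: Callable[[], object], warmup: int = 1, iters: int = 50) -> float:
    """Median wall-clock time (ms) of `run_once`, excluding warm-up calls.

    Do preprocessing (resizing, quantizing input) before calling this so that
    only model execution is measured.
    """
    for _ in range(warmup):
        run_once()  # may include one-time setup cost, so it is not timed
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


if __name__ == "__main__":
    # Stand-in workload; on real hardware pass e.g. `interpreter.invoke`.
    print(f"{median_latency_ms(lambda: sum(range(10_000))):.3f} ms")
```

The median is used rather than the mean so that occasional scheduler hiccups on the host do not skew the result.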

Time per inference, in milliseconds (ms):

| Model architecture | Desktop CPU¹ | Desktop CPU¹ + USB Accelerator (USB 3.0) with Edge TPU | Embedded CPU² | Dev Board³ with Edge TPU |
|---|---|---|---|---|
| Unet Mv2 (128x128) | 27.7 | 3.3 | 190.7 | 5.7 |
| DeepLab V3 (513x513) | 394 | 52 | 1139 | 241 |
| DenseNet (224x224) | 380 | 20 | 1032 | 25 |
| Inception v1 (224x224) | 90 | 3.4 | 392 | 4.1 |
| Inception v4 (299x299) | 700 | 85 | 3157 | 102 |
| Inception-ResNet V2 (299x299) | 753 | 57 | 2852 | 69 |
| MobileNet v1 (224x224) | 53 | 2.4 | 164 | 2.4 |
| MobileNet v2 (224x224) | 51 | 2.6 | 122 | 2.6 |
| MobileNet v1 SSD (224x224) | 109 | 6.5 | 353 | 11 |
| MobileNet v2 SSD (224x224) | 106 | 7.2 | 282 | 14 |
| ResNet-50 V1 (299x299) | 484 | 49 | 1763 | 56 |
| ResNet-50 V2 (299x299) | 557 | 50 | 1875 | 59 |
| ResNet-152 V2 (299x299) | 1823 | 128 | 5499 | 151 |
| SqueezeNet (224x224) | 55 | 2.1 | 232 | 2 |
| VGG16 (224x224) | 867 | 296 | 4595 | 343 |
| VGG19 (224x224) | 1060 | 308 | 5538 | 357 |
| EfficientNet-EdgeTpu-S\* | 5431 | 5.1 | 705 | 5.5 |
| EfficientNet-EdgeTpu-M\* | 8469 | 8.7 | 1081 | 10.6 |
| EfficientNet-EdgeTpu-L\* | 22258 | 25.3 | 2717 | 30.5 |

¹ Desktop CPU: Single 64-bit Intel(R) Xeon(R) Gold 6154 CPU @ 3.00GHz

² Embedded CPU: Quad-core Cortex-A53 @ 1.5GHz

³ Dev Board: Quad-core Cortex-A53 @ 1.5GHz + Edge TPU
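To read the benchmark figures as speedups or throughput, divide a CPU-only latency by the corresponding Edge TPU latency, and divide 1000 by a latency in milliseconds to get inferences per second. The sketch below does this for a few rows copied from the desktop columns; throughput here assumes one inference at a time with no pipelining, and, as the note above explains, ignores preprocessing.

```python
# (model, desktop CPU ms, desktop CPU + USB Accelerator ms), copied from the table.
ROWS = [
    ("MobileNet v2 (224x224)", 51.0, 2.6),
    ("Inception v4 (299x299)", 700.0, 85.0),
    ("VGG16 (224x224)", 867.0, 296.0),
]


def speedup(cpu_ms: float, tpu_ms: float) -> float:
    """How many times faster the Edge TPU run is than the CPU-only run."""
    return cpu_ms / tpu_ms


def inferences_per_second(latency_ms: float) -> float:
    """Sequential throughput implied by a per-inference latency in ms."""
    return 1000.0 / latency_ms


if __name__ == "__main__":
    for name, cpu_ms, tpu_ms in ROWS:
        print(f"{name}: {speedup(cpu_ms, tpu_ms):.1f}x faster, "
              f"~{inferences_per_second(tpu_ms):.0f} inferences/s")
```

MobileNet v2 works out to roughly a 19.6x speedup and about 385 inferences per second, consistent with the "almost 400 FPS" figure, while a large model such as VGG16 gains only about 2.9x.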